I’ve been reading about LSM trees for a while but never really dived into any implementation. I thought of starting with an existing codebase. RocksDB came to mind since I’ve used it before at work, but its codebase is quite large. So, I decided to start with LevelDB, which has a much smaller codebase and, at first glance, looks more straightforward to understand.

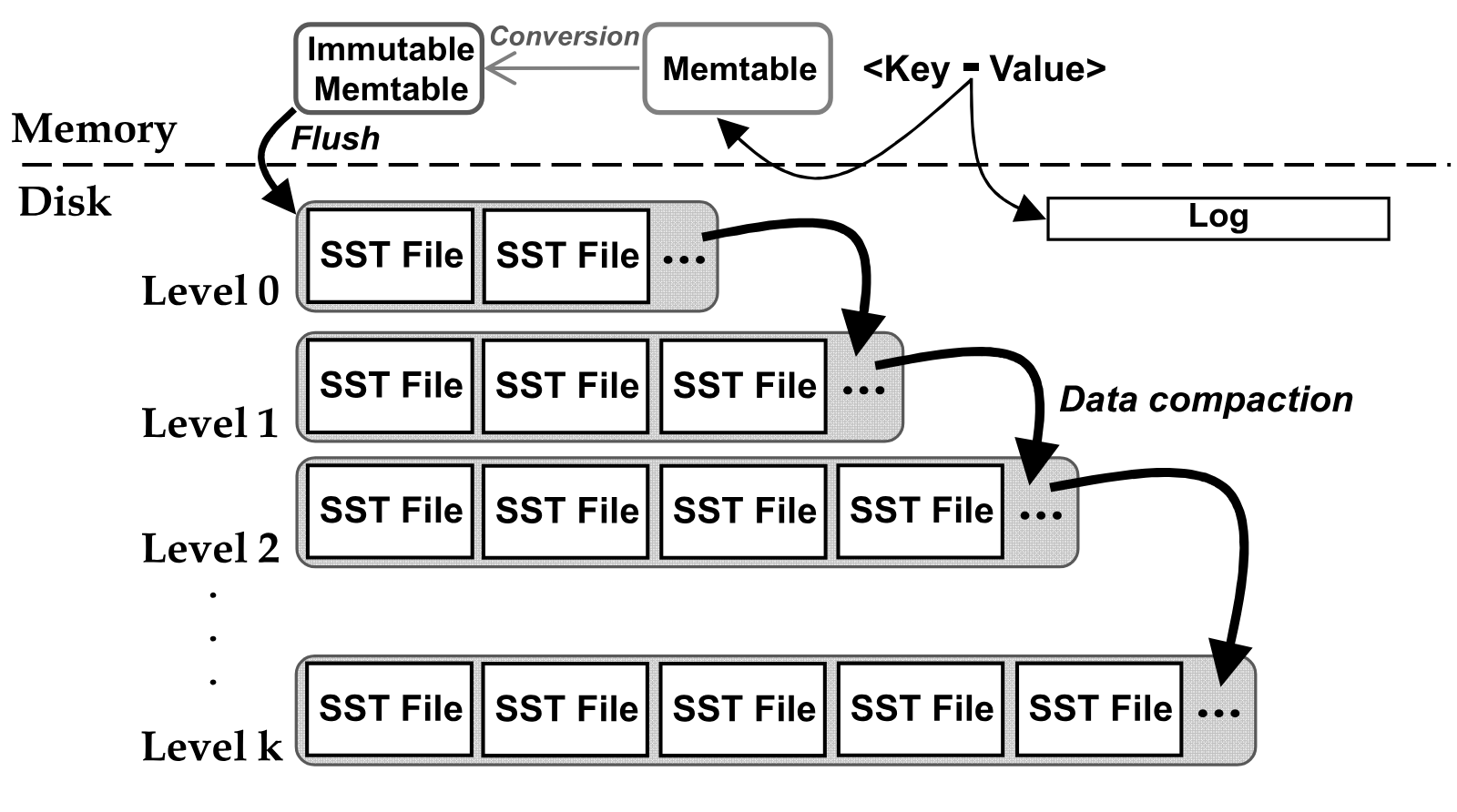

Before I delve into LevelDB, here’s a brief introduction to LSM trees.

LSM Tree background

LSM-based key-value stores are great for writing data. When new data is added, it first goes into a fast, in-memory structure. Once this space fills up, the data is flushed to disk as a sorted file. Over time, these sorted files accumulate on the disk. The system periodically merges these files, cleaning up outdated versions and creating a more compact, organized structure for efficient reads. This approach, combining in-memory buffering with sorted file merging on disk, optimizes write speed while maintaining reasonable read performance.

- In-memory Buffering: New data (key-value pairs) are first written to a fast, in-memory data structure.

- Write-Ahead Log (WAL): Before or concurrently with updating the in-memory buffer, the data is also appended to a Write-Ahead Log (WAL) on disk. This log acts as a temporary record of the writes and ensures data durability in case of a system crash.

- Flush to Disk: Once the in-memory buffer reaches its capacity, the data is flushed to disk as a new sorted file (SSTable).

- Background Compaction: In the background, a separate process merges SSTables and the WAL. During compaction, outdated versions of keys are discarded, data is re-sorted, and written to a new, more efficient SSTable. The WAL entries corresponding to the included data in the new SSTable are then cleaned up.

Including the WAL ensures that even if a crash occurs after data is written to the in-memory buffer but before it’s flushed as an SSTable, the data can still be recovered during the next startup by replaying the unprocessed entries in the WAL.

LevelDB

Here is a high-level overview diagram of LevelDB. We’ll discuss all the components in detail with multiple examples below. (image source)

Lets install leveldb on a linux machine:

git clone --recurse-submodules https://github.com/google/leveldb.git

mkdir -p build && cd build

cmake -DCMAKE_BUILD_TYPE=Release .. && cmake --build .

// Inside build directory:

-rw-rw-r-- 1 ddk ddk 725606 May 4 13:04 libleveldb.a

While compiling our first leveldb program, we need to link to this static library libleveldb.a using -lleveldb.

First Program:

/* file: example1.cpp */

#include <iostream>

#include <string>

#include <random>

#include <leveldb/db.h>

// Function to generate random lowercase English letters

char randomLowercaseLetter() {

std::random_device rd;

std::mt19937 gen(rd());

std::uniform_int_distribution<> dis('a', 'z');

return static_cast<char>(dis(gen));

}

// Function to generate a random key of size 4 letters

std::string generateRandomKey() {

std::string key;

for (int i = 0; i < 4; ++i) {

key += randomLowercaseLetter();

}

return key;

}

int main() {

// Open LevelDB database

leveldb::DB* db;

leveldb::Options options;

options.create_if_missing = true;

/* Ignore these parameters for now */

options.write_buffer_size = 512;

/* Ignore these parameters for now */

options.block_size = 512;

options.compression = leveldb::CompressionType::kNoCompression;

leveldb::Status status = leveldb::DB::Open(options, "/tmp/testdb-8", &db);

if (!status.ok()) {

std::cerr << "Unable to open database: " << status.ToString() << std::endl;

return 1;

}

int num_keys = 2;

for (int i = 0; i < num_keys; ++i) {

std::string key = generateRandomKey();

std::string value = "Value_" + std::to_string(i);

// Insert key-value pair into the database

status = db->Put(leveldb::WriteOptions(), key, value);

if (!status.ok()) {

std::cerr << "Error while writing to database: " << status.ToString() << std::endl;

delete db;

return 1;

}

}

// Close the database

delete db;

std::cout << "Successfully wrote "<< num_keys <<" key-value pairs to LevelDB." << std::endl;

return 0;

}

In the above example, we are inserting two key-value pairs into the database. Let’s examine the database files generated after executing the program.

// Compile command using g++

g++-11 example1.cpp -I<path to leveldb/include> -L<path to leveldb/build> -lleveldb

DB Files:

drwxr-xr-x 2 ddk ddk 4096 May 25 10:34 .

drwxrwxrwt 22 root root 12288 May 25 10:38 ..

-rw-r--r-- 1 ddk ddk 66 May 25 10:34 000003.log

-rw-r--r-- 1 ddk ddk 16 May 25 10:34 CURRENT

-rw-r--r-- 1 ddk ddk 0 May 25 10:34 LOCK

-rw-r--r-- 1 ddk ddk 151 May 25 10:34 LOG

-rw-r--r-- 1 ddk ddk 50 May 25 10:34 MANIFEST-000002

We see there is one .log file. When a key-value pair is inserted, LevelDB adds it to the memtable and also records it in the log file. This log file helps LevelDB recover key-values in case of a crash. In the above code, we added just two key-value pairs, which is not enough to fill one memtable. Therefore, the memtable is not yet converted to an SSTable to be added to the level hierarchy. In this case, the log file ensures the key-value pairs are persistent.

Today, we’ll check the .log file only. Let’s examine the contents of 000003.log file.

❯ xxd /tmp/testdb-8/000003.log

00000000: 60ed 8757 1a00 0101 0000 0000 0000 0001 `..W............

00000010: 0000 0001 0462 6966 6407 5661 6c75 655f .....bifd.Value_

00000020: 302a 9d78 101a 0001 0200 0000 0000 0000 0*.x............

00000030: 0100 0000 0104 6b77 6867 0756 616c 7565 ......kwhg.Value

00000040: 5f31 _1

From the above hex dump, the portion for the first record is:

60ed 8757 1a00 0101 0000 0000 0000 0001 0000 0001 0462 6966 6407 5661 6c75 655f 30

This series of bytes follows the layout mentioned here.

Also, this code parses the header (starting 7 bytes) and verifies the checksum.

- Checksum:

60ed 8757 - Length:

1a00(little endian) = 26 - Type:

01

The remaining 26 bytes are as follows:

01 0000 0000 0000 0001 0000 0001 0462 6966 6407 5661 6c75 655f 30

- The starting 8-byte value is the sequence number for the first key-value pair. This value is stored in little-endian format. Therefore, the first sequence number is 1.

01 0000 0000 0000 00

- The next 4 bytes contain the number of key-value pairs present in this record. LevelDB has a concept of a write batch, which may contain one or more key-value pairs. After reading this code, it seems that write batches contain multiple key-value pairs only in multi-threaded situations. In our example, we are writing to the database using only one application thread, so any write batch should contain only one key-value pair. Indeed, the next 4 bytes show a value

01 0000 00= 1 (little endian format).

So, we are left with the last portion of this record:

01 0462 6966 6407 5661 6c75 655f 30

01indicates that it is ofValueType::kTypeValue, which is used internally in LevelDB’s codebase.

// Value types encoded as the last component of internal keys.

// DO NOT CHANGE THESE ENUM VALUES: they are embedded in the on-disk

// data structures.

enum ValueType { kTypeDeletion = 0x0, kTypeValue = 0x1 };

- The length of the key is 4 bytes, represented by

04. Note that the length of the key could be more than 255, so LevelDB has an interesting way of encoding the length of keys and values, which we will discuss in a later post.

Finally, we get to the actual key and value:

62 6966 64= “bifd”5661 6c75 655f 30= “Value_0”

The length of the value is 7 bytes.

There is a tool provided by LevelDB called leveldbutil, which gets generated when you build LevelDB from source. This tool allows us to observe the contents of any files generated by LevelDB.

Let’s use leveldbutil to examine the contents of the log file 000003.log. leveldbutil will be present inside the build directory.

❯ ./leveldbutil dump /tmp/testdb-8/000003.log

--- offset 0; sequence 1

put 'bifd' 'Value_0'

--- offset 33; sequence 2

put 'kwhg' 'Value_1'

This command will output the contents of the log file in a human-readable format, allowing us to see the key-value pairs and other information stored in it.

I’ve also added additional logging to the code to print messages during write operations.

<thread id> <function name> : <line num> ...

130941237274432 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/MANIFEST-000001

130941237274432 Open : 1556 Current version status:

--- level 0 ---

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

130941237274432 Put : 1504 Put - key: bifd

130941237274432 Write : 1240 Adding 1 records to log. last_sequence = 1

130941237274432 Write : 1250 Adding 1 records to mem table. last_sequence = 1

130941237274432 Write : 1282 Current version status:

--- level 0 ---

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

130941237274432 Put : 1504 Put - key: kwhg

130941237274432 Write : 1240 Adding 1 records to log. last_sequence = 2

130941237274432 Write : 1250 Adding 1 records to mem table. last_sequence = 2

130941237274432 Write : 1282 Current version status:

--- level 0 ---

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

Successfully wrote 2 key-value pairs to LevelDB.

As observed, the log file records are added first before the key-value pairs are inserted into the memtable. This protects against data loss in the event of system crashes or failures. It’s worth noting that at this stage, no SST files exist in the system. SST files are stored within the levels of LevelDB.

Now, let’s explore a different example where we open the same database and read keys from it.

/* file: example2.cpp */

#include <iostream>

#include <string>

#include <leveldb/db.h>

int main() {

// Open LevelDB database

leveldb::DB* db;

leveldb::Options options;

options.create_if_missing = true;

options.write_buffer_size = 512;

options.block_size = 512;

options.compression = leveldb::CompressionType::kNoCompression;

leveldb::Status status = leveldb::DB::Open(options, "/tmp/testdb-8", &db);

if (!status.ok()) {

std::cerr << "Unable to open database: " << status.ToString() << std::endl;

return 1;

}

std::string value;

status = db->Get(leveldb::ReadOptions(), "abcd", &value);

if (!status.ok()) {

std::cerr << "Key does not exits: " << status.ToString() << std::endl;

}else {

std::cout << "Value for key: abcd is: " << value << std::endl;

}

status = db->Get(leveldb::ReadOptions(), "bifd", &value);

if (!status.ok()) {

std::cerr << "Key does not exits: " << status.ToString() << std::endl;

}else {

std::cout << "Value for key: bifd is: " << value << std::endl;

}

// Close the database

delete db;

return 0;

}

In the above example, we are reading two keys from the database. The first key, “abcd,” is expected to not exist, while the second key, “bifd,” should exist.

Here the trace output after running this program.

❯ ./a.out

124976298780480 BuildTable : 28 Created table file : /tmp/testdb-8/000005.ldb

124976298780480 WriteLevel0Table : 525 Writing to Level0 files, table: 5 of file size: 145

124976298780480 Recover : 384 Recovery done

124976298780480 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/000003.log

124976298780480 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/MANIFEST-000002

124976298780480 Open : 1556 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

Key does not exits: NotFound:

Value for key: bifd is: Value_0

And the contents of the db directory

❯ ls -all

total 36

drwxr-xr-x 2 ddk ddk 4096 May 25 17:32 .

drwxrwxrwt 23 root root 12288 May 25 17:32 ..

-rw-r--r-- 1 ddk ddk 145 May 25 17:32 000005.ldb

-rw-r--r-- 1 ddk ddk 0 May 25 17:32 000006.log

-rw-r--r-- 1 ddk ddk 16 May 25 17:32 CURRENT

-rw-r--r-- 1 ddk ddk 0 May 25 17:20 LOCK

-rw-r--r-- 1 ddk ddk 452 May 25 17:32 LOG

-rw-r--r-- 1 ddk ddk 151 May 25 17:20 LOG.old

-rw-r--r-- 1 ddk ddk 81 May 25 17:32 MANIFEST-000004

As observed from the log traces, a new SST file is created from with the file name /tmp/testdb-8/000005.ldb. This SST file is then added to Level 0, and the old log file (000003.log) is deleted. The creation of this SST file is based on the existing log file, meaning the log file is converted to an SST file during recovery if the log file was not empty.

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

This is a concise representation of an SST file, where the file number is 5. File numbers are monotonically increasing and are persisted in the MANIFEST file, a topic we’ll discuss in a later post.

The segment 'bifd':1:1 -> 'kwhg':2:1 indicates that the smallest key is ‘bifd’ with sequence number 1 and value type 1 (or kTypeValue). The largest key in this file is ‘kwhg’ with sequence number 2 and value type 1.

A new log file, 000006.log, is created, while the old log file is deleted.

Let’s examine the contents of 000005.ldb using leveldbutil:

❯ ./leveldbutil dump /tmp/testdb-8/000005.ldb

'bifd' @ 1 : val => 'Value_0'

'kwhg' @ 2 : val => 'Value_1'

❯ ./leveldbutil dump /tmp/testdb-8/000006.log

We can see that there are only two key-value pairs in 000005.ldb, and 000006.log is empty, as expected, since no new keys were inserted.

Additionally, you can try running the second program multiple times and observe that each time, the old log file is deleted and a new log file is created.

I was curious to know why it is required to create a new log file every time LevelDB recovers. However, it seems there is an option to reuse existing log files as well. This option,

reuse_logs, is defaulted tofalse, which is why we are seeing new log files every time LevelDB recovers and the old log files are converted to SST files.

Let’s fill the memtable by adding 12 new keys. You can achieve this by modifying the num_keys variable in your example1.cpp code to 12.

❯ ./a.out

137757878200128 Recover : 384 Recovery done

137757878200128 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/MANIFEST-000004

137757878200128 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/000006.log

137757878200128 Open : 1556 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: pmyh

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 3

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 3

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: itpe

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 4

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 4

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: tosu

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 5

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 5

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: qocp

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 6

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 6

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: bcct

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 7

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 7

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: zlzj

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 8

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 8

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: sxxg

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 9

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 9

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: brfd

137757878200128 MakeRoomForWrite : 1378 There is no more space in the current 1st mem table. Creating a new log file for the 2nd memtable with log number : 9

137757878200128 MakeRoomForWrite : 1382 Created new log file /tmp/testdb-8/000009.log

137757878200128 MakeRoomForWrite : 1412 Scheduling compaction ...

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 10

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 10

137757878200128 Write : 1282 Current version status: --- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757871568448 BackgroundCompaction : 708 Starting Background Compaction

137757871568448 BackgroundCompaction : 709 Before compaction: Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: xlww

137757871568448 CompactMemTable : 553 Compacting mem table

137757871568448 BuildTable : 28 Created table file : /tmp/testdb-8/000010.ldb

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 11

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 11

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: xbmp

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 12

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 12

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: fzkj

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 13

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 13

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757878200128 Put : 1504 Put - key: zmqx

137757878200128 Write : 1240 Adding 1 records to log. last_sequence = 14

137757878200128 Write : 1250 Adding 1 records to mem table. last_sequence = 14

137757878200128 Write : 1282 Current version status:

--- level 0 ---

5:145['bifd':1:1 -> 'kwhg':2:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

137757871568448 WriteLevel0Table : 525 Writing to Level0 files, table: 10 of file size: 256

Successfully wrote 12 key-value pairs to LevelDB.

A couple of noticeable things happened this time. During the insertion of the key “brfd”, LevelDB determined that there was no additional space in the memtable. Consequently, LevelDB switched to a second memtable and scheduled compaction to move the first memtable to a new SST file. This new SST file will typically be added to Level 0. Note that a new log file, 000015.log, was also created for the second memtable. LevelDB maintains two memtables, with one active at a time while the other may be getting flushed to disk.

Additionally, note that the thread ID in the following two trace lines is different:

137757871568448 BackgroundCompaction : 708 Starting Background Compaction

137757878200128 Put : 1504 Put - key: xlww

This indicates that the compaction occurs in a different thread, which is managed by LevelDB.

However, we haven’t observed any traces indicating new SST files being added to the leveled files. This could be due to the application thread ending before the compaction process completed.

Lets check the database files

total 48

drwxr-xr-x 2 ddk ddk 4096 May 25 17:59 .

drwxrwxrwt 23 root root 12288 May 25 18:04 ..

-rw-r--r-- 1 ddk ddk 145 May 25 17:32 000005.ldb

-rw-r--r-- 1 ddk ddk 231 May 25 17:59 000008.log

-rw-r--r-- 1 ddk ddk 167 May 25 17:59 000009.log

-rw-r--r-- 1 ddk ddk 256 May 25 17:59 000010.ldb

-rw-r--r-- 1 ddk ddk 16 May 25 17:59 CURRENT

-rw-r--r-- 1 ddk ddk 0 May 25 17:20 LOCK

-rw-r--r-- 1 ddk ddk 454 May 25 17:59 LOG

-rw-r--r-- 1 ddk ddk 452 May 25 17:32 LOG.old

-rw-r--r-- 1 ddk ddk 81 May 25 17:59 MANIFEST-000007

We can observe two log files: 000008.log, which is older, and 000009.log, the newer one (as indicated by the traces above). Ideally, after memtable compaction, 000008.log should have been deleted because the new SST file, 000010.ldb, should contain all the records present in 000008.log. Let’s verify this first using leveldbutil.

❯ ./leveldbutil dump /tmp/testdb-8/000008.log

--- offset 0; sequence 3

put 'pmyh' 'Value_0'

--- offset 33; sequence 4

put 'itpe' 'Value_1'

--- offset 66; sequence 5

put 'tosu' 'Value_2'

--- offset 99; sequence 6

put 'qocp' 'Value_3'

--- offset 132; sequence 7

put 'bcct' 'Value_4'

--- offset 165; sequence 8

put 'zlzj' 'Value_5'

--- offset 198; sequence 9

put 'sxxg' 'Value_6'

❯ ./leveldbutil dump /tmp/testdb-8/000010.ldb

'bcct' @ 7 : val => 'Value_4'

'itpe' @ 4 : val => 'Value_1'

'pmyh' @ 3 : val => 'Value_0'

'qocp' @ 6 : val => 'Value_3'

'sxxg' @ 9 : val => 'Value_6'

'tosu' @ 5 : val => 'Value_2'

'zlzj' @ 8 : val => 'Value_5'

So, our guess was correct. One of the memtables got compacted, and a new SST file was persisted to the disk. However, the corresponding log file still exists in the database folder. It’s possible that the application ended, and the log file would have been deleted soon after. Additionally, note that SST files (.ldb) are sorted by keys, which is a well-known property of SST files.

Lets try to run it again without inserting any new keys.

❯ ./a.out

130164466403136 BuildTable : 28 Created table file : /tmp/testdb-8/000010.ldb

130164466403136 WriteLevel0Table : 525 Writing to Level0 files, table: 10 of file size: 256

130164466403136 BuildTable : 28 Created table file : /tmp/testdb-8/000011.ldb

130164466403136 WriteLevel0Table : 525 Writing to Level0 files, table: 11 of file size: 212

130164466403136 Recover : 384 Recovery done

130164466403136 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/000009.log

130164466403136 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/MANIFEST-000007

130164466403136 RemoveObsoleteFiles : 289 Deleted /tmp/testdb-8/000008.log

130164466403136 Open : 1556 Current version status:

--- level 0 ---

10:256['bcct':7:1 -> 'zlzj':8:1]

5:145['bifd':1:1 -> 'kwhg':2:1]

11:212['brfd':10:1 -> 'zmqx':14:1]

--- level 1 ---

--- level 2 ---

--- level 3 ---

--- level 4 ---

--- level 5 ---

--- level 6 ---

Successfully wrote 0 key-value pairs to LevelDB.

So, this time, two new SST files were created and added to Level 0, while both 000009.log and 000008.log were deleted. This behavior during LevelDB recovery is expected, as we observed in the previous section.

Up to this point, we’ve only discussed memtable compaction and adding SST files to Level 0. LevelDB also performs compaction of Level 0 files and adds them to Level 1, and so on. However, this topic is not covered in this blog.

You may have noticed how quickly the memtable fills up, even with only a few keys inserted. This is due to the limit set on the write_buffer_size. I forgot to include memtable memory usage in the previous examples, so I created a new database instance and inserted a few keys to fill up the memtable.

134426232211264 Put : 1505 Put - key: phrv

134426232211264 MakeRoomForWrite : 1343 mem_->ApproximateMemoryUsage() = 520

134426232211264 MakeRoomForWrite : 1379 There is no more space in the current 1st mem table. Creating a new log file for the 2nd memtable with log number : 4

As observed, when the memtable memory usage reaches 520, it stops adding keys to that memtable and starts preparing to flush to disk. This is because we have set the write_buffer_size to 512. It’s worth noting that the memtable is an in-memory data structure that uses a skiplist, which in turn uses an arena memory allocator. Therefore, some additional memory is used to maintain the skiplist data structure, contributing to the total memory usage within the target write_buffer_size.

options.write_buffer_size = 512;

I also had to reduce the block size in the arena allocator to 128 bytes. This was necessary because the default value of 4k block size is too large and would fit a lot of key-value pairs, which would not be suitable for our examples. Additionally, it also requires that the write_buffer_size be at least 5k bytes with 4k arena block size. Setting write_buffer_size to 512 bytes while keeping arena block size defaulted to 4k makes LevelDB go into an unexpected loop, with the memtable always full even without adding any keys.

Please check the comment about write_buffer_size to know more about it.

Finally, I want to briefly discuss the multi-threaded key insertion operation in LevelDB.

Here is the user-facing API for writing data:

Status DB::Put(const WriteOptions& opt, const Slice& key, const Slice& value) {

WriteBatch batch;

batch.Put(key, value);

return Write(opt, &batch);

}

Every key-value insertion is first encapsulated into a WriteBatch. This batch is then processed by the following function:

Status DBImpl::Write(const WriteOptions& options, WriteBatch* updates) {

Writer w(&mutex_);

w.batch = updates;

w.sync = options.sync;

w.done = false;

MutexLock l(&mutex_);

writers_.push_back(&w);

// Loop where other writes get blocked and

// waits for the front writer to exit this function

while (!w.done && &w != writers_.front()) {

w.cv.Wait();

}

if (w.done) {

return w.status;

}

....

....

// May temporarily unlock and wait.

Status status = MakeRoomForWrite(updates == nullptr);

if (status.ok() && updates != nullptr) {

// Collect more batches to create a larger batch

WriteBatch* write_batch = BuildBatchGroup(&last_writer);

....

....

{

mutex_.Unlock();

// This is a slow operation

status = log_->AddRecord(WriteBatchInternal::Contents(write_batch));

if (status.ok()) {

// Adds the batch to memtable

status = WriteBatchInternal::InsertInto(write_batch, mem_);

}

mutex_.Lock();

....

....

}

}

while (true) {

Writer* ready = writers_.front();

writers_.pop_front();

if (ready != &w) {

ready->status = status;

ready->done = true;

ready->cv.Signal();

}

if (ready == last_writer) break;

}

// Notify new head of write queue

if (!writers_.empty()) {

writers_.front()->cv.Signal();

}

return status;

}

In this function, every application thread that attempts key insertion becomes a writer thread and pushes a writer object to the writers_ deque. While one of the writers (say w1) is inside the critical section (BuildBatchGroup), other concurrent writers (say w2, w3, w4, …) will get stuck at the line MutexLock l(&mutex_);. When w1 unlocks the mutex and initiates appending to the log and memtable, the writes waiting on the mutex will now have access to the writers_ queue and push their write objects to it. However, these writers cannot proceed further due to the next while loop, as they are waiting for w1 to exit this function. W1 will notify the next writer (w2) at the front of the queue. At this point, w2 will enter the critical section and group multiple write batches with single records into a larger write batch containing multiple records or key-value pairs.

So that’s it for this entry of the LevelDB blog. In the next post, I will cover read operation and versions. For curious readers, here is a brief description about versions.